Out of sight, out of mind.

Forgetting what is out of sight was quite useful when living in the forest, hunting for food, but things are different now.

Organizations depend on Quality at Speed software to survive in the digital ecosystem, and forgetting important software activities hurts both quality and speed.

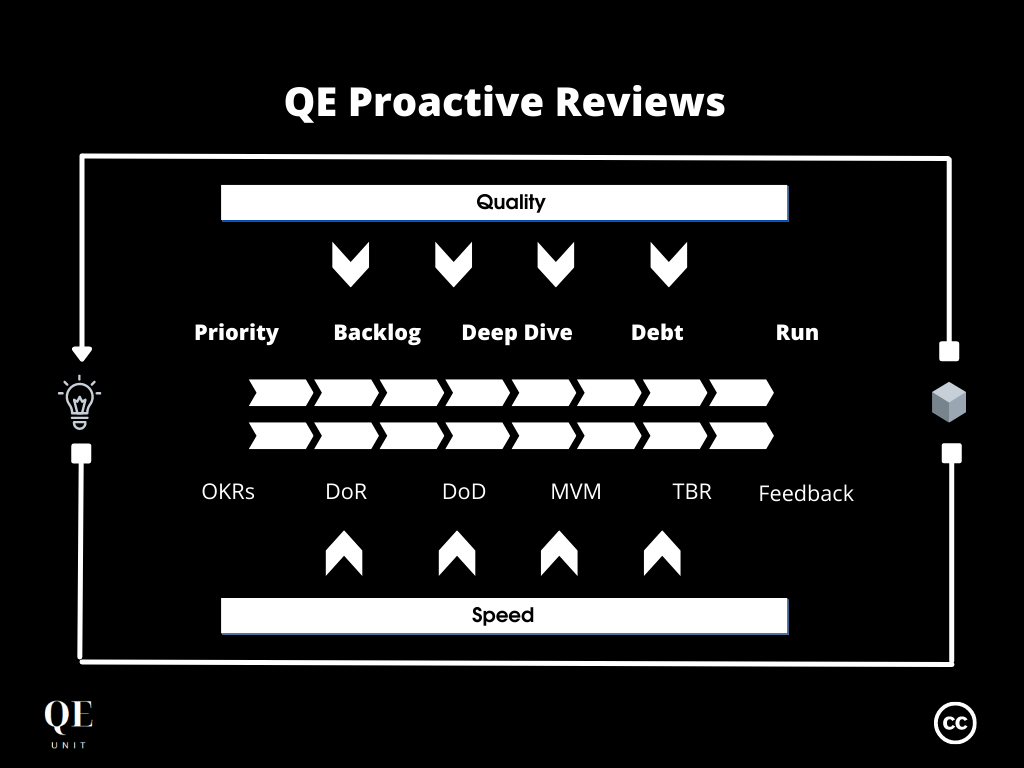

Organizations can use the counterforce of Quality Engineering, through QE Proactive Reviews, to ensure that valuable activities are performed.


What are QE Proactive Reviews

Reviews are an effective mechanism to improve quality and speed with minimal coordination effort.

Many reviews are performed along the software lifecycle when deliverables are ready, typically embedded in the Definition of Ready or Definition of Done.

But many valuable activities are not part of the feature flow.

QE Proactive Reviews apply a systematic review to activities that tend to fall out of sight of teams, negatively impacting quality and speed.

These reviews act along the software lifecycle with:

- Priority review for planned versus actual OKRs

- Backlog review to prioritize and clean DoD and QE Notes

- Deep dive review to keep in sync with the reality

- Debt review in its various forms using backlog and decision records

- Run review analyzing changes, problems, incidents, and support.

Each review helps contain the complexity that naturally accumulates, by leveraging regular interactions on important tasks.

These practices are challenging to sustain for organizations that easily focus on accelerating feature delivery to survive in the marketplace.

That’s why Quality Engineering is necessary for Quality at Speed software.

Why use QE Proactive Reviews in Quality Engineering

Quality Engineering is the paradigm constraining the entire software lifecycle to the imperative of quality and speed.

Its implementation relies on the five domains of MAMOS: Methods, Architecture, Management, Organization, and Skills.

QE Proactive Reviews are part of the Methods domain, used to systematically review and prioritize activities that would otherwise fall out of sight.

How do QE Proactive Reviews contribute to Quality

Quality attributes are easily forgotten when there is pressure to deliver features as fast as possible.

Teams need a systematic way to review identified quality attributes and their effective planning or implementation.

QE Proactive Reviews create exactly this continuous force: a regular evaluation of quality that feeds back into the ongoing plans.

QE Proactive Reviews contribute to Quality by being:

- Result-driven in prioritizing activities that are reviewed

- Systematic with a defined frequency and process for review

- Scalable to different activities and extensible to many teams.

How do QE Proactive Reviews contribute to Speed

Reviews can be seen as a waste of time by teams that want to iterate on the product with minimal interruptions and non-productive tasks.

Yet entropy is the natural tendency of a system to accumulate complexity over time, and software systems are no exception.

Additionally, focusing on speed alone in the short term leads to an accumulation of debt, which in the end slows down the entire software lifecycle with structural issues.

Teams need to regularly review the ongoing flow of activities to focus on the most valuable ones, reducing the accumulated complexity and debt.

QE Proactive Reviews contribute to Speed with:

- Focus in prioritizing main deliverables in the review

- Asynchronicity by supporting decoupled interactions

- Rhythm with the defined frequency of reviews

- Visibility that clarifies which activities were selected.

How to start with QE Proactive Reviews in QE

QE Proactive Reviews can be set up progressively from day one, adding the most valuable reviews step by step.

Effective reviews share the following characteristics:

- Owned by someone

- Scheduled systematically

- Time-boxed for focus

- Prepared beforehand

- Producing actionable outcomes.
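As a rough illustration, the characteristics above can be captured in a small record and checked automatically. This is only a sketch: the `ReviewDefinition` class, its field names, and the `missing_characteristics` helper are hypothetical names introduced here, not part of any QE tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReviewDefinition:
    """Hypothetical record describing one recurring review."""
    name: str
    owner: str                    # who owns the review
    frequency_days: int           # how often it is scheduled
    timebox_minutes: int          # maximum meeting duration
    agenda: List[str]             # what is prepared before the meeting
    expected_outputs: List[str]   # which actionable outcomes are expected


def missing_characteristics(review: ReviewDefinition) -> List[str]:
    """Return the characteristics that the review definition still lacks."""
    gaps = []
    if not review.owner.strip():
        gaps.append("owner")
    if review.frequency_days <= 0:
        gaps.append("schedule")
    if review.timebox_minutes <= 0:
        gaps.append("time-box")
    if not review.agenda:
        gaps.append("preparation")
    if not review.expected_outputs:
        gaps.append("actionable outcomes")
    return gaps


# Illustrative data: a run review without an owner or expected outputs.
run_review = ReviewDefinition(
    name="Run review",
    owner="",
    frequency_days=14,
    timebox_minutes=45,
    agenda=["incidents", "support tickets"],
    expected_outputs=[],
)
print(missing_characteristics(run_review))  # ['owner', 'actionable outcomes']
```

A check like this could run whenever a new review is proposed, so that gaps are visible before the first meeting is booked.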

The priority of implementation depends on your starting point, and where you have more complexity and risks.

It is, however, recommended to start at the end of the software lifecycle to get an accurate picture of the software, and then align upstream.

In the book The Phoenix Project, one effective practice for flow is in fact to stop all ongoing projects and then prioritize only the most valuable ones.

The reviews are listed here in the order of the software lifecycle:

- Priority review

- Backlog review

- Deep dive review

- Debt review

- Run review.

Priority review

Priorities must be reviewed where they are defined.

Software organizations usually plan the work at various levels, from the global business strategy to cross-functional teams.

Some organizations rely on dedicated software, others on slides or other alternatives, with a more or less formalized OKR process.

Implement the priority review following these steps:

- Identify prioritization mechanisms in place

- Select the instances that can have the most impact

- Collect the planned priorities and actual results

- Align with stakeholders on the need for reviews

- Organize and prepare the review meeting

- Execute the review collaboratively

- Generate actionable outputs with focus

- Make sure outputs become outcomes and share.

The described process can be applied iteratively across teams and existing review mechanisms.

If nothing is in place, light OKRs are recommended.
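To prepare a priority review, the comparison of planned priorities with actual results can be sketched in a few lines. The `KeyResult` structure, the `items_to_discuss` helper, and the 70% attainment threshold are assumptions made for this example, not a prescribed OKR format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class KeyResult:
    """Hypothetical key result with a planned target and the actual value."""
    objective: str
    description: str
    planned: float
    actual: float

    @property
    def attainment(self) -> float:
        # Share of the planned target actually reached (0.0 if nothing planned).
        return self.actual / self.planned if self.planned else 0.0


def items_to_discuss(key_results: List[KeyResult], threshold: float = 0.7) -> List[KeyResult]:
    """Select key results below the threshold, lowest attainment first."""
    lagging = [kr for kr in key_results if kr.attainment < threshold]
    return sorted(lagging, key=lambda kr: kr.attainment)


# Illustrative data only.
quarter = [
    KeyResult("Reliability", "Automated regression suites delivered", planned=10, actual=4),
    KeyResult("Growth", "Checkout conversion experiments run", planned=100, actual=95),
    KeyResult("Reliability", "Services with defined SLOs", planned=5, actual=3),
]

for kr in items_to_discuss(quarter):
    print(f"{kr.objective}: {kr.description} ({kr.attainment:.0%})")
```

The resulting list gives the meeting a focused agenda: only the gaps between plan and reality are discussed, starting with the largest.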

Backlog review

The backlog of tasks cascades from the originally defined priorities.

It is the reference list of tasks in progress, completed, and still to be done, acting as the team's source of truth for what needs to be done.

Backlogs tend to become out of date, accumulating new tasks that are not necessarily valuable and that end up as debt and noise to clean up.

Reviewing backlogs requires you to:

- Identify existing team backlogs

- Select the most valuable backlogs to address first

- Ask the team to bring the backlog up to date

- Book the review on a regular basis, ideally every week

- Prepare the meeting by flagging tasks to be discussed

- Execute the review to remove, keep, or postpone tasks

- Share the updated backlog with relevant stakeholders.

This process can be applied to both user story backlogs and QE Notes.
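The flagging and triage steps of a backlog review could look like the sketch below. The `Task` fields, the 90-day staleness rule, and the `Decision` values are assumptions chosen for illustration. A real backlog tool would expose its own fields.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Dict, List, Tuple


class Decision(Enum):
    KEEP = "keep"
    REMOVE = "remove"
    POSTPONE = "postpone"


@dataclass
class Task:
    """Hypothetical backlog task."""
    title: str
    last_updated: date
    linked_to_priority: bool


def flag_for_discussion(tasks: List[Task], today: date, stale_after_days: int = 90) -> List[Task]:
    """Flag tasks that are stale or not linked to a current priority."""
    return [
        t for t in tasks
        if not t.linked_to_priority or (today - t.last_updated).days > stale_after_days
    ]


def apply_decisions(tasks: List[Task], decisions: Dict[str, Decision]) -> Tuple[List[Task], List[Task]]:
    """Return (active backlog, postponed tasks); tasks without a decision are kept."""
    active, postponed = [], []
    for task in tasks:
        decision = decisions.get(task.title, Decision.KEEP)
        if decision is Decision.KEEP:
            active.append(task)
        elif decision is Decision.POSTPONE:
            postponed.append(task)
        # Decision.REMOVE: the task is dropped from both lists.
    return active, postponed


# Illustrative data only.
backlog = [
    Task("Add retry to payment API", date(2024, 5, 20), linked_to_priority=True),
    Task("Migrate legacy report", date(2024, 1, 10), linked_to_priority=True),
    Task("Try new logo animation", date(2024, 5, 28), linked_to_priority=False),
]
flagged = flag_for_discussion(backlog, today=date(2024, 6, 1))
print([t.title for t in flagged])  # ['Migrate legacy report', 'Try new logo animation']

active, postponed = apply_decisions(backlog, {
    "Migrate legacy report": Decision.POSTPONE,
    "Try new logo animation": Decision.REMOVE,
})
print([t.title for t in active])  # ['Add retry to payment API']
```

Keeping undecided tasks by default is a deliberate choice in this sketch: the review removes noise only when someone explicitly decides to do so.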

Deep dive review

This review focuses more on reviewing the content of a particular theme rather than prioritizing a list.

Its objective is to share a narrow subject with the relevant stakeholders to improve alignment, understanding, and future support.

Decisions can be made to approve a direction or a set of options, or simply to give input on something that is being worked on.

This exercise is also a good opportunity to create relationships between stakeholders of different teams and levels, and promote individuals.

Deep dives are implemented with the following steps:

- Define the deep dive perimeter (domain, infrastructure, …)

- Identify the initial relevant stakeholders

- Select the lead owners of the instance

- Start sharing a backlog with peers

- Schedule deep dives every 2 to 3 weeks

- Execute the deep dive and keep actionable notes

- Document and share the summary.

This process regularly pushes information about what is really happening to a variety of stakeholders, and enables early adjustments.

Debt review

Many forms of debt end up in systems over time, from organization and methods to technology.

Regular reviews identify the debt priorities to address. Otherwise, debt slowly grows in the background until its negative impact is much greater.

Debt reviews must be executed in a defined context to be precise and effective locally, while debt work is prioritized at the big-picture level to maximize outcomes.

Put in place debt reviews with these actions:

- Define domains and teams to review

- Confirm the scope of debt (technology, organizational, …)

- Clarify the metadata required for debt items

- Prepare the meeting by collecting debt items as a backlog

- Schedule the debt review at a defined frequency

- Execute the meeting with a maximum list of items to keep

- Document the meeting notes and put debt items in backlogs.

Debt reviews can be prepared using existing backlogs of tasks and bugs, and other lists such as architecture decision records and operations lists.
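One possible way to represent debt items with their metadata and to enforce a maximum list of items to keep is sketched below. The `DebtItem` fields, the 1-to-5 impact and effort scales, and the ranking rule (highest impact first, then lowest effort) are assumptions for the example. Teams may weigh debt differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DebtItem:
    """Hypothetical debt item with the metadata agreed for the review."""
    title: str
    kind: str      # e.g. "technology" or "organizational"
    source: str    # e.g. "backlog", "bugs", "ADR"
    impact: int    # assumed scale: 1 (low) to 5 (high)
    effort: int    # assumed scale: 1 (low) to 5 (high)


def shortlist(items: List[DebtItem], max_items: int = 3) -> List[DebtItem]:
    """Keep at most max_items, ranked by highest impact, then lowest effort."""
    ranked = sorted(items, key=lambda item: (-item.impact, item.effort))
    return ranked[:max_items]


# Illustrative data only.
collected = [
    DebtItem("Flaky end-to-end tests", "technology", "bugs", impact=4, effort=2),
    DebtItem("Outdated onboarding guide", "organizational", "backlog", impact=2, effort=1),
    DebtItem("Upgrade unsupported database version", "technology", "ADR", impact=5, effort=3),
    DebtItem("Unclear on-call ownership", "organizational", "backlog", impact=4, effort=1),
]

for item in shortlist(collected, max_items=2):
    print(f"[{item.kind}] {item.title} (impact {item.impact}, effort {item.effort})")
```

Capping the list forces the meeting to choose, which keeps the debt review focused on the few items that will actually be put into backlogs.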

Run review

The last review is located at the heart of the digital experience: operations.

A software system undergoes continuous changes that generate a large amount of information useful for preparing the reviews.

Alerts, changes, problems, incidents, and customer complaints are all useful sources of information to know the actual state of the software.

Put in place run reviews by following these steps:

- Define teams and domains with the most value

- Define the first scope of run reviews (incidents, support)

- Prepare the meeting by collecting and analyzing data

- Schedule the reviews with identified stakeholders

- Execute the review, pushing for actionable items

- Transform the meeting notes into backlog items for action

- Make sure to have owners for each item until the next meeting.

Similarly to the other reviews, run reviews can be implemented progressively where the stakes are highest, before expanding gradually.
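The data preparation and ownership checks of a run review can be sketched as follows. The `RunEvent` and `ActionItem` structures and both helper functions are hypothetical. In practice the events would come from whatever incident and support tools the organization uses.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class RunEvent:
    """Hypothetical operational event collected before the review."""
    kind: str    # e.g. "incident", "support", "change"
    domain: str  # e.g. "checkout", "search"


@dataclass
class ActionItem:
    """Hypothetical action item coming out of the review."""
    title: str
    owner: Optional[str] = None


def events_per_domain(events: List[RunEvent]) -> List[Tuple[str, int]]:
    """Count events per domain, most affected domain first."""
    return Counter(event.domain for event in events).most_common()


def items_without_owner(items: List[ActionItem]) -> List[ActionItem]:
    """Return action items that still need an owner before the next meeting."""
    return [item for item in items if not item.owner]


# Illustrative data only.
events = [
    RunEvent("incident", "checkout"),
    RunEvent("support", "checkout"),
    RunEvent("incident", "search"),
    RunEvent("incident", "checkout"),
]
print(events_per_domain(events))  # [('checkout', 3), ('search', 1)]

actions = [
    ActionItem("Add alert on payment timeouts", owner="Lea"),
    ActionItem("Document search reindex procedure"),
]
print([item.title for item in items_without_owner(actions)])
# ['Document search reindex procedure']
```

Starting with a simple count per domain is usually enough to decide where the first run reviews should focus, before adding finer analysis.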

QE Proactive Reviews within MAMOS

Organizations able to continuously deliver Quality at Speed software master the implementation of proactive reviews.

They understand that focusing on the main flow is not enough, and they regularly bring important activities back into view to keep the rhythm in the long run.

Succeeding in the digital marketplace is about running a continuous marathon of sprints, where speed matters in both the short and the long term.

QE Proactive Reviews are implemented by Quality Engineering teams that understand the value of constraining their lifecycle to Quality and Speed.

How proactive are you gonna be?

References

Gene Kim, Kevin Behr, George Spafford (2013), The Phoenix Project: A Novel about IT, DevOps, and Helping Your Business Win. IT Revolution Press.

Scott Berkun (2008), Making Things Happen: Mastering Project Management, O’Reilly Media.