An external pair of eyes is always helpful.

When those eyes belong to a peer who can improve both the quality and the speed of our deliverables, even better.

Peer reviews are an effective practice, popularized in software by methodologies such as Agile and Extreme Programming.

One difficulty for teams is deciding which reviews to perform, and when, so that they keep iterating with both quality and speed.

This article shares three just-in-time peer reviews you need to create a Quality Engineering ecosystem.


What are Just-in-Time Peer Reviews

Peer review is the habit, common among effective software teams, of systematically reviewing deliverables with one or more peers to get quick feedback for improvement.

Because it is done with peers in an informal setup, it strikes a good balance between the time and effort invested and the value obtained.

Studies have shown that feedback from five people is enough to spot 80% of the defects.

With limited resources, which reviews to perform and how many reviewers to involve depend on the stakes and risks involved.

The alternatives to peer reviews are not reviewing at all, which carries high risks, or implementing heavy governance, which limits flow without necessarily improving quality much.

Peer reviews can involve one or several peers and be performed either at a defined frequency or just in time, when a deliverable is ready.

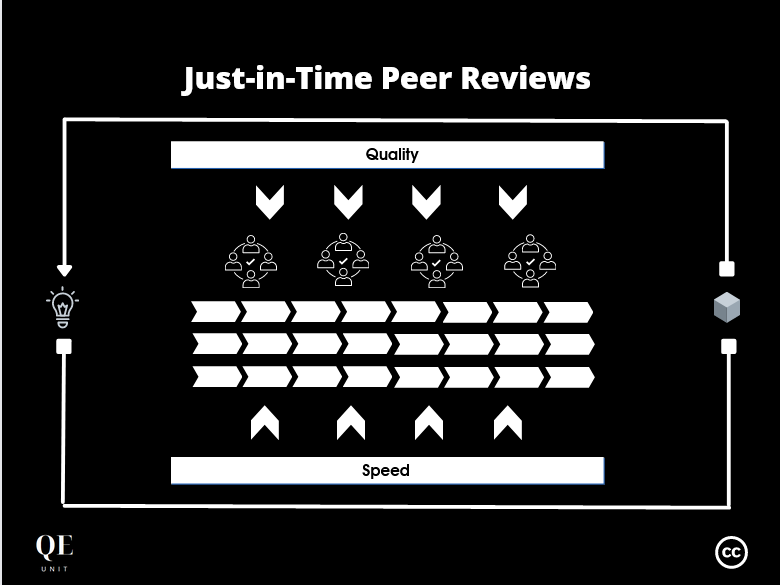

Just-in-Time Peer Reviews in Quality Engineering are the progressive implementation of peer reviews of QE practices across the software lifecycle.

The first category, QE deliverables review, is performed whenever one of the framework's outputs is produced, such as OKRs, a 1-Slide Problem Summary, or QE Notes.

Code review and demo, on the other hand, are required for the first Minimum Releasable Code (MRC) and for each subsequent Quality Engineering Software Increment.

Let’s see how Just-in-Time Peer Reviews are useful for Quality at Speed software.

Why use Just-in-Time Peer Reviews in Quality Engineering

Quality Engineering is the paradigm constraining the entire software lifecycle to continuously deliver Quality at Speed software.

Its implementation relies on progressive methodologies, rolled out as the organization's maturity grows.

Just-in-Time Peer Reviews are one of the essential methods, implemented jointly with the other domains of MAMOS, from architecture to skills.

How do Just-in-Time Peer Reviews contribute to Quality?

Meeting a software product's quality attributes depends on ensuring the right level of quality at every stage of the software lifecycle.

Teams that systematically review deliverables as soon as they are ready get quick feedback at a low cost, both to run the review and to correct the defects it finds.

Additionally, the informal setup of reviews pushes for transparency and trust among the teams, allowing them to improve their collaboration over time.

Just-in-Time Peer Reviews contribute to Quality by being:

- Result-oriented, focused on concrete deliverables to improve

- Systematic, executed as soon as each deliverable is ready, with low latency

- Scalable, deployable across many types of deliverables and teams.

How do Just-in-Time Peer Reviews contribute to Speed?

The required speed in Quality Engineering is like a continuous marathon of sprints: short, frequent increments, with that frequency sustained over the long run.

Delivering continuously requires maintaining organizational focus through an established rhythm and visibility, both of which peer reviews contribute to directly.

Just-in-Time Peer Reviews contribute to Speed with:

- Focus on delivering and reviewing important deliverables

- Rhythm, by being systematically performed when deliverables are ready

- Asynchronicity, supporting remote and decoupled interactions

- Visibility, by being integrated into the flow of work through the Definition of Ready (DoR) and Definition of Done (DoD).

How to start with Just-in-Time Peer Reviews in Quality Engineering?

Successful organizational change requires gradual transitions: first align the organization, then roll out the new practices.

Implementing Just-in-Time Peer Reviews is about creating an organizational habit based on the following principles:

- Systematically assess the need for a review

- Perform the review while the deliverable is still "hot"

- Keep the format informal for transparency

- Timebox reviews to 30 minutes by default

- Record review notes in the DoD or QE Notes

- Adapt the number of reviewers to the stakes and risks.
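For teams that track reviews in tooling, the principles above can be sketched as a small Python record. This is a minimal illustration under stated assumptions, not part of the QE framework: the `PeerReview` class, the `Risk` levels, and the reviewer count per risk level are all hypothetical. Only the 30-minute default timebox, the recorded notes, and the idea of sizing the reviewer count by risk come from the principles.

```python
from dataclasses import dataclass, field
from datetime import timedelta
from enum import Enum


class Risk(Enum):
    """Hypothetical risk levels used to size a review."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical mapping: the principle only says to adapt the number of
# reviewers to stakes and risks; these counts are illustrative.
REVIEWERS_BY_RISK = {Risk.LOW: 1, Risk.MEDIUM: 2, Risk.HIGH: 3}

# Default timebox taken from the principles (30 minutes).
DEFAULT_TIMEBOX = timedelta(minutes=30)


@dataclass
class PeerReview:
    """Hypothetical record of one just-in-time peer review."""
    deliverable: str
    risk: Risk
    timebox: timedelta = DEFAULT_TIMEBOX
    reviewers: list[str] = field(default_factory=list)
    notes: list[str] = field(default_factory=list)

    def required_reviewers(self) -> int:
        """Number of reviewers expected for this risk level."""
        return REVIEWERS_BY_RISK[self.risk]

    def is_done(self) -> bool:
        """Treat the review as done (e.g. for a DoD check) once enough
        reviewers took part and at least one note was recorded."""
        return len(self.reviewers) >= self.required_reviewers() and bool(self.notes)


review = PeerReview("1-Slide Problem Summary", Risk.MEDIUM)
review.reviewers += ["alice", "bob"]
review.notes.append("Clarify the success metric in the problem statement")
print(review.is_done())  # True
```

Keeping the reviewer counts in a lookup table rather than hard-coding them makes them easy to adjust as the team learns which stakes justify more reviewers.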

From there, you can start implementing reviews with a team that works on valuable increments and is open to the practice, which raises the probability of success.

The implementation order can vary in your context depending on your maturity and level of risks along the software lifecycle.

The following reviews are useful in all cases:

- OKRs to foster an organizational alignment on what matters

- 1-Slide Problem Summary to make sure people solve the right problem

- QE Code review to get early feedback and adaptation of the code

- QE Demo review to maintain rapid alignment between stakeholders.

QE Deliverables review consists of systematically reviewing one of the Quality Engineering framework deliverables as soon as it is ready.

Apply the following steps as an iterative process:

- Identify the most valuable software domains to invest in

- Identify candidate team(s) within that perimeter

- Map out the QE practices already implemented (e.g. MRC, TBR)

- Share the methodology guidelines

- Make sure the review is added to the practice's DoD

- Support the team in the first execution

- Use tasks to track the review pipeline

- Improve review outputs using QE Notes and an enriched DoD

- Document and share best practices to expand

- Plan a review every 3 months for improvements and deployment.

This process is repeated over time to gradually increase the maturity of the organization.

Just-in-Time Peer Reviews within MAMOS

Peer reviews are an effective way to constrain the software lifecycle toward Quality at Speed software while balancing risks and effort.

Implementing them just in time at critical stages of the lifecycle is what enables rapid iterations that maximize the value delivered within resource constraints.

Integrating Just-in-Time reviews with the other practices of the MAMOS framework shows the value of investing in the entire system.

Quality Engineering is about playing the long game to reinvent organizations while still winning victories along the way with short increments.

Which QE review will you start with?

References

Agile Alliance. Extreme Programming.

Sandro Mancuso (2014). The Software Craftsman: Professionalism, Pragmatism, Pride. Prentice Hall.

Pete McBreen (2001). Software Craftsmanship: The New Imperative. Addison-Wesley Professional.